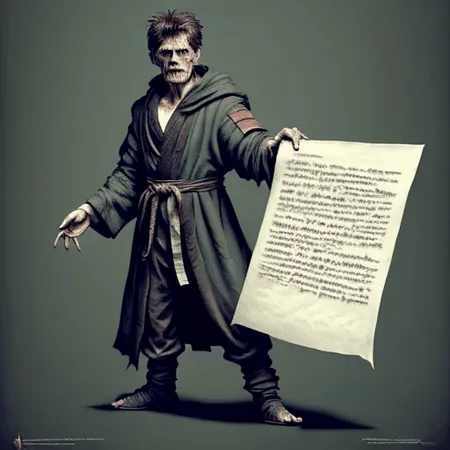

mania - concept trained without data (local install tutorial - technical)

v1.0

Trained for 2000 steps with the phrase "#:0.41|~maniacal laughter:0.2|^maniacal laughter:0.05" using https://github.com/ntc-ai/conceptmod

Animations sweep the LoRA weight from 0.0 to 1.8. All examples use the trigger phrase "maniacal laughter".

Words are pale shadows of forgotten names. As names have power, words have power. Words can light fires in the minds of ~~men~~ stable diffusion models.

Tutorial:

This tutorial is technical. For a much easier path, follow https://civitai.com/models/58873/conceptmod-tutorial-fire-train-any-lora-with-just-text-no-data-required and run it on RunPod. It's cheap: $5 to train a model and under $1 to create animations.

Local installation (technical)

Requires 20 GB of VRAM.
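A quick way to check the VRAM requirement before installing anything is sketched below. It assumes an NVIDIA GPU with `nvidia-smi` on the PATH and treats "20 GB" as roughly 20000 MiB. `check_vram` is a hypothetical helper, not part of conceptmod.

```shell
#!/usr/bin/env bash
# Hypothetical helper: does the first GPU have roughly the 20 GB this tutorial needs?
# Assumption: NVIDIA GPU, nvidia-smi installed, 20 GB treated as 20000 MiB.
REQUIRED_MIB=20000

check_vram() {
  # Optional first argument: total VRAM in MiB (lets you test without a GPU).
  local total_mib="${1:-}"
  if [ -z "$total_mib" ]; then
    if ! command -v nvidia-smi >/dev/null 2>&1; then
      echo "nvidia-smi not found" >&2
      return 2
    fi
    # With nounits, memory.total is a bare number in MiB; keep the first GPU only.
    total_mib=$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | head -n 1 | tr -d ' ')
  fi
  if [ "$total_mib" -ge "$REQUIRED_MIB" ]; then
    echo "ok: ${total_mib} MiB"
    return 0
  fi
  echo "too little VRAM: ${total_mib} MiB (need about ${REQUIRED_MIB})"
  return 1
}
```

Run `check_vram` with no argument on the training machine; a 24 GB card should print `ok`.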

0) Install A1111 (AUTOMATIC1111 stable-diffusion-webui)

1) git clone https://github.com/ntc-ai/conceptmod.git

2) cd conceptmod

3) Edit `train_sequential.sh` and add your training phrase (see above).
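The training phrase contains `|` characters and spaces, so inside a shell script it must be quoted. A minimal sketch, assuming you keep it in a variable (`PHRASE` is a hypothetical name; use whatever `train_sequential.sh` actually expects), plus a hypothetical `print_terms` helper that splits it back into its `|`-separated terms so you can check what you typed. The split is purely syntactic; what the `#`, `~` and `^` prefixes mean is defined by conceptmod, not by this script.

```shell
#!/usr/bin/env bash
# Single quotes keep the phrase literal: unquoted, bash would treat each '|'
# as a pipe and split the phrase on spaces. PHRASE is a hypothetical name.
PHRASE='#:0.41|~maniacal laughter:0.2|^maniacal laughter:0.05'

# Print each '|'-separated term as "text -> number after the last ':'".
print_terms() {
  local -a terms
  local term
  IFS='|' read -ra terms <<< "$1"
  for term in "${terms[@]}"; do
    printf '%s -> %s\n' "${term%:*}" "${term##*:}"
  done
}

print_terms "$PHRASE"
# Prints:
# # -> 0.41
# ~maniacal laughter -> 0.2
# ^maniacal laughter -> 0.05
```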

4) Install the dependencies (see https://github.com/ntc-ai/conceptmod#installation-guide) and ImageReward (https://github.com/THUDM/ImageReward).

5) Train the model with `bash train_sequential.sh` (this takes a while).
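Because training runs for a long time, you may want it to survive a closed terminal or SSH session. One generic way, sketched here with a hypothetical `run_logged` helper, is `nohup` plus a log file. Nothing about this is specific to conceptmod.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start a script in the background, ignoring hangups,
# with stdout and stderr written to a log file. Prints the background PID.
run_logged() {
  local script="$1" log="$2"
  nohup bash "$script" > "$log" 2>&1 &
  echo "$!"
}

# Usage, from the conceptmod directory:
#   pid=$(run_logged train_sequential.sh train.log)
#   tail -f train.log                  # Ctrl-C stops tail, not the training run
#   kill -0 "$pid" && echo running     # check whether training is still going
```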

6) Once training runs successfully, a new checkpoint is saved into `models`. If you have enabled samples, they are written to the `samples` directory during training.

7) Move the checkpoints into an A1111 models path (adjust the webui path to your install):

`mkdir -p ../stable-diffusion-webui2/models/Stable-diffusion/0new && mv -v models/*/*.ckpt ../stable-diffusion-webui2/models/Stable-diffusion/0new/`
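If you repeat this step often, a slightly safer variant is a small function that stops when no checkpoints exist and creates the destination first. `move_checkpoints` is a hypothetical helper; the paths in the usage line come from the command above, so substitute your own webui location.

```shell
#!/usr/bin/env bash
# Hypothetical helper: move every <src>/*/*.ckpt into an A1111 model folder.
move_checkpoints() {
  local src="$1" dest="$2"
  local -a ckpts
  shopt -s nullglob                 # unmatched glob -> empty array, not the literal pattern
  ckpts=("$src"/*/*.ckpt)
  shopt -u nullglob
  if [ "${#ckpts[@]}" -eq 0 ]; then
    echo "no checkpoints found under $src" >&2
    return 1
  fi
  mkdir -p "$dest"                  # mv needs the target directory to exist
  mv -v "${ckpts[@]}" "$dest"/
}

# Usage, with the paths from the command above:
#   move_checkpoints models ../stable-diffusion-webui2/models/Stable-diffusion/0new
```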

8) Extract the LoRA with sd-scripts https://github.com/kohya-ss/sd-scripts (requires a new conda environment).

Here is the script I use to create LoRAs from every checkpoint in a directory. Replace dir and basemodel with your own values, and run it from inside the sd-scripts project.

https://github.com/ntc-ai/conceptmod/blob/main/extract_lora.sh
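The linked script is the reference. Purely as an illustration, a batch extractor built on sd-scripts' `networks/extract_lora_from_models.py` might look like the sketch below. The flag names reflect my understanding of that script's interface (confirm them with `--help` for your checkout), the `_lora.safetensors` naming and the default rank of 32 are arbitrary choices, and `extract_all` is hypothetical. It assumes an SD 1.x base model; other base models may need additional flags.

```shell
#!/usr/bin/env bash
# Hypothetical batch extractor, NOT the author's extract_lora.sh.
# Run from the sd-scripts checkout; verify the flags with --help.
# Set DRY_RUN=1 to print the commands instead of running them.
extract_all() {
  local dir="$1" basemodel="$2" dim="${3:-32}"   # rank 32 is an arbitrary example
  local ckpt out
  local -a cmd
  for ckpt in "$dir"/*.ckpt; do
    [ -e "$ckpt" ] || continue                    # glob matched nothing
    out="${ckpt%.ckpt}_lora.safetensors"          # tuned.ckpt -> tuned_lora.safetensors
    cmd=(python networks/extract_lora_from_models.py
      --model_org "$basemodel" --model_tuned "$ckpt"
      --save_to "$out" --dim "$dim")
    if [ "${DRY_RUN:-0}" = 1 ]; then
      printf '%s\n' "${cmd[*]}"
    else
      "${cmd[@]}" || return 1
    fi
  done
}

# Usage (paths are placeholders):
#   DRY_RUN=1 extract_all ../stable-diffusion-webui2/models/Stable-diffusion/0new /path/to/base_model.ckpt
```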

9) Start the A1111 `webui.py` with `--api`.
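Step 10 presumably talks to the webui over its HTTP API (which is why `--api` is needed), so it helps to wait until that API answers. A minimal sketch, assuming the default local address `http://127.0.0.1:7860` and polling `/sdapi/v1/sd-models`, one of the endpoints webui exposes when started with `--api`. `wait_for_api` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll the A1111 API until it responds, up to <tries> attempts.
wait_for_api() {
  local url="${1:-http://127.0.0.1:7860/sdapi/v1/sd-models}" tries="${2:-30}"
  local i
  for ((i = 1; i <= tries; i++)); do
    # -s silent, -f fail on HTTP errors, --max-time caps each attempt at 5 seconds
    if curl -sf --max-time 5 -o /dev/null "$url"; then
      echo "API is up"
      return 0
    fi
    if [ "$i" -lt "$tries" ]; then
      sleep 2
    fi
  done
  echo "API not reachable at $url" >&2
  return 1
}
```

Call `wait_for_api` before running `lora_anim.py`; with the defaults it gives the webui about a minute to load.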

10) Create the animations

I used:

> python3 lora_anim.py -s -0.0 -e 1.8 -l "mania" -lp ", maniacal laughter" -np "nipples, weird image." -n 32 -sd 7 -m 2.0
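For intuition about what `-s -0.0 -e 1.8 -n 32` produces: if `lora_anim.py` spaces the 32 frames evenly from the start weight to the end weight, endpoints included (an assumption; I have not checked how the script interpolates), frame i gets weight start + i * (end - start) / (n - 1). The hypothetical `frame_weights` helper below just prints that schedule.

```shell
#!/usr/bin/env bash
# Hypothetical helper: evenly spaced LoRA weights from start to end, n frames, inclusive.
# The linear spacing is an ASSUMPTION about lora_anim.py, not taken from its source.
frame_weights() {
  local start="$1" end="$2" n="$3"
  awk -v s="$start" -v e="$end" -v n="$n" 'BEGIN {
    for (i = 0; i < n; i++) {
      w = (n > 1) ? s + i * (e - s) / (n - 1) : s   # linear interpolation
      printf "%d %.3f\n", i, w
    }
  }'
}

frame_weights 0.0 1.8 32   # first line "0 0.000", last line "31 1.800"
```

Under that assumption, consecutive frames differ by 1.8 / 31, about 0.058 in LoRA weight.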

If you create something with this, please tag it 'conceptmod'.

-

React!: https://civitai.com/user/ntc/images?sort=Most+Reactions